It's a sunny Thursday morning, and like before I haven't caught a wink of sleep. Will I be able to look sick enough to get out of work early today as well? I don't know. At least I finally managed to be productive in this particular insomniac binge.

I'm in a very complex love-hate relationship with my laptop. I like it for being retro in a strangely stylish way. I like its awesome keyboard, which is actually a step above practically all the competition out there. I like how the thing has been running on battery power since one in the morning and now it's... 7:30 in the morning. Pretty darn durable for a laptop with a dedicated graphics card.

But then there are some serious issues with this machine. Like the irrational behavior of some of the Lenovo-patched drivers in the sleep-wake cycle. Or how my wallpaper disappears whenever I use the battery stretch mode. Most of all, I hate how flaky the ATI driver for the dedicated graphics card on this machine is. It gave me two BSoDs last night due to an amdkmp driver crash (one that Lenovo's been 'working on' since last year at least) and another one as a sort of graphics-driver-related cascading failure showing the dreaded NMI/memory parity error. It's the exact same BSoD message I received before my last Dell's motherboard fried to a crisp due to faulty die casting of the GPU. I never get any errors when I'm using the Intel integrated graphics mode, which probably uses the 4500HD chipset, but why should I settle for the crappy integrated chip when I paid good money for a dedicated graphics solution? If I wanted a laptop that would just run off the Intel IGP I would have bought a much cheaper and lighter laptop... Though to be fair, a cheap, light laptop with 1440x900 resolution is a rarity these days for some reason. The manufacturers, Apple included, are still sticking with a crummy 1280x800 resolution for ~13in screens. Way behind the times, those people.

Ok, I'll be honest. This laptop's still pretty good running on the Intel IGP. Things I do for work don't usually need a dedicated GPU with separate RAM. They need processing power, and this 2.5GHz Core 2 Duo machine packs enough wallop to blast most consumer-class desktops out of the water. I'm just pissed that I can't play any games on this machine without risking the whole OS going down in blue flames... To be fair I haven't been playing much of anything these days, and I certainly haven't been playing anything that would actually need the punch offered by a dedicated graphics card, but still, I'd like to keep my options open. In fact, only three things stopped me from purchasing a new aluminum MacBook instead of a ThinkPad: screen resolution, the lack of an SD card slot, and the dedicated graphics solution. Well, since the MacBooks coming out right now have much better GPUs with SD card slots to boot, not to mention phenomenal battery life estimated at around 6~7 work hours, the only thing ThinkPads have going for them is the screen resolution, something that can be managed if you're an external monitor kind of person.

With the unstable graphics card giving me grief, I keep on thinking about bringing another gadget into my life. Maybe a new netbook (the ones on the market today last for up to 10.5 hours per charge). The 701's getting really old and it's a real pain to type up a full report on that keyboard. I can manage, but it makes my fingers feel like I've been playing the piano for hours. While a new netbook would certainly be nice (especially since even the worst netbook out there can run StarCraft, thus satisfying some of my entertainment needs), I'm not sure this is a good time to buy a new system. The Nvidia ION is just around the corner and there is the disturbing rumor of the Apple tablet coming out as early as September or possibly this winter season.

Oh yes, the Apple tablet. People have been dreaming of it for years now, ever since the Newton died. If Apple pulls it off there's a very good chance that I'll end up with one of those things, especially considering the wealth of science applications on the iTunes Store at the moment. Some of the applications, like Papers, are a godsend to anyone in the academic profession. And I know for certain that Drew Endy et al. are planning an iPhone-OS-based mobile version of the biobuilder platform, which is a beginner-friendly yet heavy-duty synthetic biology CAD program that integrates into regular computer-based distributions... Yeah, even speaking without gadget lust there's a good chance I'll get a touch or a tablet in the near future, since my profession almost seems to require having one for some reason these days. Kind of understandable when you think about it. The last time the academic profession saw a mobile platform that was reliable and consistent enough for field/lab deployment was close to ten years ago, when the term PDA was new and Palm ruled the Earth.

On another note (what are rant posts without multiple topics to dazzle the readers' minds?), only 95 days until NaNoWriMo. I'm definitely participating this year, with my trusty laptop and all. I even have most of the rough draft and settings laid out in a clean text-based wiki format. I didn't expect myself to be able to come up with such awesome ideas, but I think I might have hit the real jackpot. I haven't read anything even remotely close to it for years. Very hundred-years-of-solitude-y. With some undeniable influence from all the Japanese light novels I've been force-fed over the years.

I'm really looking forward to it.

Well, time to get to work!

Thursday, July 30, 2009

Wednesday, July 29, 2009

Rainy day

Thanks to the wooziness induced by the late night last night, I was able to get off from work way early today. If this can keep up with my schedule I might as well sleep late every day. It's good to be outside and free when the sun is shining, except that it's not quite the case right now.

After days of half-formed rainstorms that only lasted an hour or two, condemning the whole city to the kind of pre-rainstorm humidity and heat that would make Tokyo proud, it's finally pouring down. I don't know whether to feel happy or sad about this. Certainly I've been waiting for a decent rainstorm for a while now, with thunder and lightning. But why does it have to be the day I could have taken my laptop out to the park to get some personal workspace? The world works in really strange ways.

With the rain, and with my brain still a little soggy from lack of sleep and rest, I just came back home right away instead of hanging around the city to do whatever. I could have spent some much needed (and decidedly cooler) time in bookstores in the area, but I didn't feel up to it. Maybe it's the weather.

So now I'm sitting on the sofa in my room, looking out the window being riddled with raindrops, wondering what to do with this unexpected free time. I've already read most of the books in my personal library a few times. There might be movies on my hard drives that I could be watching, but I don't like being so passive when I'm feeling tired and under the weather. Yes, I'd rather act opposite to my mood and condition. Otherwise there's no end to the depths I might fall to.

Maybe I can try playing some games? I've already burned through my collection of Deus Ex mods a couple of times before, so that's rather out of the question. I don't feel like exploring synthetic biology right now, since while I'm looking for something involved, I don't want to rack my brain over other stuff, just not right now. Maybe I can look into some MMORPG options? Like one of those free-to-play games that are all the rage these days.

Online games are one of those interesting things in life that have so much potential to be awesome, but never are. It's like looking at a seed that continuously ends up dying instead of blooming into the amazing flower we were all promised. Take a look at the .hack// franchise on the PlayStation consoles for example (actually, now that I think about it, they only came out for the PS2, with the final one being promised for the PSP). Now THAT's how MMORPGs should be. Except that the .hack// games aren't MMORPGs. They're what people call a simulated MMORPG, with simulations of real people populating a virtual server that exists within the game. The game even has a virtual operating system with a virtual web browser and a virtual email client, with unreal people sending you email during your virtual off-time. The premise sounds weird, but it works well in practice, and the franchise continued for close to a decade, with one awesome anime series acting as a prequel to the game (the game spanning 4 DVDs, with a sequel spanning 3 DVDs) and not-so-awesome other things populating the marketplace (actually, one of the light novels based on the franchise is quite good. AI BUSTER 1 and 2; I personally prefer the second one). Maybe the whole faux-MMORPG setup only works precisely because none of the stuff is real. They are all made-up, make-believe people living in a make-believe world (oh wait, did I just describe the heart of 'real' MMORPGs as well?).

As Sartre himself said, hell is other people. This game can probably explain the Japanese fixation with androids better than any number of academic theses out there.

Well, I think I'll stop writing for a moment and seek out some interesting MMORPG to waste time on.

Late night or early morning?

This isn't good. It's four in the morning and I still can't get to sleep. I'm currently running some good lounge music in the background through the evil alchemy of an internet radio station, courtesy of smoothjazz. Thinking about things like diybio, synthetic biology, artscience and the upcoming NaNoWriMo competition, which I plan on participating in this year. Only 96 days left to go. I'm thinking of telling a bunch of my friends to register just to see if they can actually do it. Yes, even the ones who can't write artsy-creative stuff to save their lives.

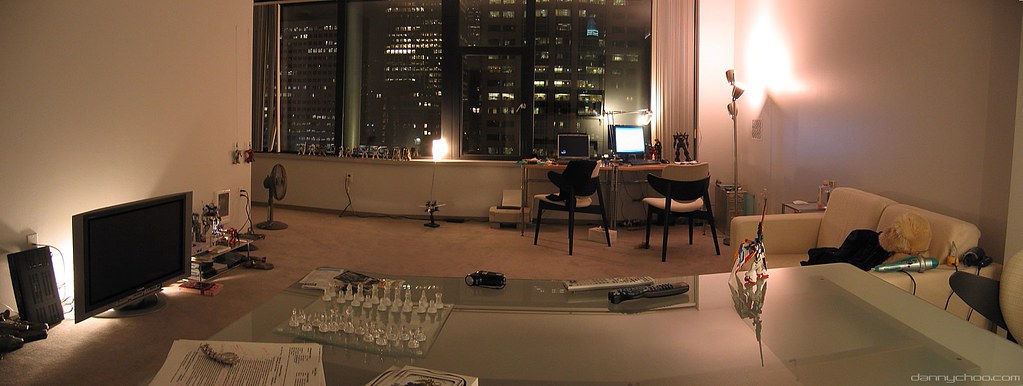

The filter for the AC might have gone bad. My throat feels sore, but if I open the window the room will get hot again, meaning more sleepless agony for me. Listening to the music and looking out the window at all the blinking buildings in the main city down Broadway, the whole scene reminds me of the setting of many classic Japanese sci-fi futurescapes, of the kind that can be seen in games like Snatcher (if you haven't played it yet, don't call yourself a gamer) and its spiritual successor Policenauts, both favorites of mine.

Picked this picture of an apartment in Seattle from the blog of one of my favorite otakus on the web. How long will I have to live to be able to have a view like that outside my own apartment window? Hopefully this science gig will work out better than it is now... I've never been much of a house person. I prefer a cool apartment in a high place surrounded by the pretty lights of the city over any house any day of the week. There's some quality about that kind of architecture that's really appealing to me... The prices of apartments in the city are dropping across the board. Maybe I should shop around for the time when I finally get my degree and become a more or less productive member of society.

It's always interesting that the impression I get from such semi-futuristic landscapes tends to be nostalgia of some sort.

I'm nostalgic about the future.

Tuesday, July 28, 2009

More workspace pics. And other things.

As usual my blog is running a late night double feature, like how the old theaters used to do it. Or will it be a triple feature?

Here's a link to another collection of workspaces, this time workspaces of science fiction writers. All of them are quite well known. Some of them are even known to me. Although I'd have loved to be able to see the actual workspaces of William Gibson/Neal Stephenson/Warren Ellis with their computers as well. For reasons explained elsewhere I really dig that kind of stuff. I think Neal Stephenson is the person who taught me to take the Apple platform seriously way before it was cool to be an Apple user (writing this takes me back. There was a time when the Mac OSes were considered horrible systems, with Windows-based computing platforms being the operating systems of the future. People would always get into a fuss about how the public school system was failing the children by letting them use Apple products while the rest of the world ran on Windows. They were so naive back then).

Like I guessed, writers certainly live in a whole lot of clutter. Most of the workspaces are surprisingly clean though, even accounting for the fact that most of the writers probably cleaned up a little before the scheduled photoshoot... It's the same with research labs actually. Kid, I'm speaking from experience, so listen up. While everyone out there will tell you that well-organized workspaces, rooms, etc. are essential for productivity, you should see the workspaces and rooms of the most brilliant people in the arts and sciences. Trust me, none of them are capable of maintaining a clean room on their own. There's always some kind of mess, some kind of clutter. Ever looked at the desk of Albert Einstein? The thing is like a maze. And I too have some clutter issues when I'm running large private projects that span months at a time. It's only that I try to clean everything up and keep it clean when I don't have anything long-term running out of my own place (I once covered a whole wall with post-its for notes and plans/numbers for my thesis (of sorts). My room was in a truly crazy state back then). However, despite the clutter, the workspaces of people who actually work on things tend to have some weird method to their madness. For example, it's rare to see actual 'filth' among the clutter. Sure, there are notes, pieces of paper, books and gadgets everywhere. If the person is in a laboratory-oriented profession, perhaps even some reagents. But never filth. No half-eaten food rotting away, no weird yellow/brown stuff of mysterious origin. All the clutter is information, all of it vital to whatever he/she is doing. Food isn't information and it's not vital to finding out some new law that governs high-energy plasma. Or writing science fiction. Or designing proteins to save human lives. So yeah, if you walk into a person's workroom and smell rotten food all over the place, chances are he/she isn't working. Just being lazy and wasting time.
But if you walk into a workroom and find a crazy amount of papers and scribbled pieces of stuff everywhere, don't touch anything. Those people get stuff done.

Here's a July system guide from Ars Technica aimed at building gaming machines. Even the 'value' gaming machine on here (~$900) is effectively futureproof. You'll be running contemporary games four years from now with that kind of machinery. You can add some more oomph with the careful addition of either 4- or 8-core processors to the machine, with 16GB of RAM or more. But then that would be overkill. Not only would such a machine be futureproof, it would be on equal standing with some of the heavier single semi-supercomputers in some labs, the kind used for rendering in-house protein calculations. Of course, a machine like that will guzzle electricity, so anyone who can run that kind of machine for four years is probably very rich or doesn't pay his/her own utility bills.

Despite the fact that most of my computing needs these days revolve around mobile solutions, systems like that are very tempting to build. Just imagine the things I would be able to do with a graphics card with 1GB of dedicated DDR3 memory and all sorts of crazy shader capabilities. Not just games, mind you. With upcoming frameworks like CUDA it would be possible to offload computing-intensive processes to the GPU instead of running them straight off the CPU, in effect turning such machines into mini supercomputers, at least compared to the puny units of our current generation. Even laptops might be able to run some serious number crunching once the system's perfected.

Monday, July 27, 2009

Late night. What to do?

Every so often I'm faced with a conundrum.

It's late at night, and either I have something I need to finish before the sun rises, or I'm in the midst of some strange problem that just won't let me sleep, both mentally and physically. I would normally get some work done in situations like that, but for some reason I can't. There's something in my mind that just stops me from functioning normally, as if some pebble got caught between the cogwheels of my mind. I can feel the urge to do something building inside myself but I can't channel it into something more useful, the energy just disappearing like anything else that follows the course of slow, painful thermodynamic dissipation in this universe. (That makes me think. It would be so interesting to be able to come up with a model that describes human creativity as a function of the thermodynamic mechanisms of the universe.)

When I'm faced with such difficult situations I usually try to do something that doesn't require much coherence yet still needs some kind of input from myself. And over the years I've found writing (and sometimes drawing) to be the perfect solution for those late night blues... I also play a bit of violin (just picked up a new one a few weeks ago, in fact), but that's a difficult hobby to have in the city, where the walls between the apartments are usually thin enough to be punched through (though it isn't nearly as bad as the situation in Japan).

I've picked up a few useless skills over the past few months as well. Did I ever write here about how I never learned to touch-type and how my friends were always giving me the weird eye (living around geeks and geekettes has that side effect)? Well, I learned to touch-type a few weeks ago, roughly around the same time I got my new violin. It only took me about a day or two to memorize the layout of the keys, which is only to be expected I guess, considering how I've lived with a computer for half my life. The rate at which I got used to writing on the keyboard without using the hunt-and-peck approach surprised me a bit, however. Right now I'm writing this without looking at the screen. That's right. I'm writing this while I'm looking out the window of my room, without looking at the screen or the keyboard. Who would have thought it? Learning to type completely blind in the course of a week or two.

I still need to get used to the keyboard though. I still make some odd typos and my wpm isn't all that high. Average at best. It's something I really need to work on considering the volume of writing I do on an everyday basis, both for pleasure and for work.

When I'm writing things like this, all alone in my room sitting on my couch, I always play some kind of music. In fact, I can barely remember the last time I went without some kind of music playing around me. The iPod is plugged into my ears practically every single moment I'm outside, and whenever I'm home I play music on my laptop's speakers, or when it's late at night I use wireless headphones whose transmitter plugs into the speaker port of my computer (I only use Bluetooth for syncing my cellphone with my computer for some reason). Of the terabytes of data I'm sitting on, the vast majority of the space is taken up by music from all over the world, across all sorts of genres. I have Bach, Mozart, and Beethoven, each representing his own era. I have some rock, some of it of the harder variety. I also have a crazy collection of J-pop compilations and singles, and I have many of them in the form of original CDs sitting in some storage space in the city, since it was way too impractical to bring them with me through my frequent moving binges. I regularly buy music from promising bands and composers, like the OST/inspired album for Neotokyo. It makes me look like some sort of freak in this day and age, when people my age don't quite seem to buy anything if it's available in digital format.

Music must be one of the most fundamental inventions of humanity. Perhaps the invention of music is the event we can clearly mark as the moment of divide between human the Homo sapiens and human the semi-ape. It's logical, yet impulsive. It's formless, yet the system that makes music come true can be observed all across the world, across the universe in weird places, like the shapes of galaxies, the pulses of the stars, or the patterns of moss in a forest. Music is very mathematical in that regard, and it is probably no surprise that expertise in one usually accompanies the other... There are some people who say the arts are too different from the sciences for them to coexist, but I tend to think it's only a way to cover for their own incompetence. All the greatest artists in human history have been scientists in one form or another, and this pathetic division that forces a child to choose between a path of arts and a path of sciences is a freakish accident of social nature that has nothing to do with the arts or the sciences themselves. I say this a lot these days, but really. One day, future generations will look back at the state of the arts and sciences today and laugh or be horrified at how crazy and irrational it all is...

Well I think I'm through venting for now. Gotta get back to work for the day ahead.

It's late night, and either I have something I need to finish before the sun rises, or I'm midst of some strange problem that just won't let me sleep, both mentally and physically. I would normally get some work done in situations like that, but for some reason I can't. There's something in my mind that just stops me from functioning normally, as if some pebble got caught between the cogwheel of my mind. I can feel the urge to do something building inside myself but I can't channel it to something more useful, the energy just disappearing like anything else that follows the course of slow, painful thermodynamic dissipation in this universe. (that makes me think. It would be so interesting to be able to come up with a model that describes human creativity as a function of the thermodynamical mechanism in the universe.)

When I'm faced with such difficult situations I usually try to do something that doesn't require much coherence yet still need some kind of input from myself. And over the years I've found writing (and sometimes drawing) to be the perfect solution for those late night blues... I also play a bit of violin (just picked up a new one a few weeks ago, in fact), but that's a difficult hobby to have in the city where the walls between the apartments are usually thin enough to be punched through (though it isn't nearly as bad as the situation in Japan).

I've picked up a few useless skill over the past few months as well. Did I ever write here about how I never learned to touchtype and how my friends were always giving me weird eye (living around geeks and geekettes have that side effect)? Well I've learned to touchtype about a few weeks ago, roughly around the same time I got my new violin. It only took me about a day or two to memorize the layout of the keys, only to be expected I guess. Considering how I lived with a computer for half my life. The rate at which I got used to writing on the keyboard without using the hunt&peck approach surprised myself a bit however. Right now I'm writing this without looking at the screen. That''s right. I'm writing this while I'm looking out the window of my room, without looking at the screen or the keyboard. Who would have thought it? Learning to type completely blind in course of a week or two.

I still need to get used to the keyboard though. I still make some odd typos and my wpm isn't all that high. Average at best. It's something I really need to work on considering the volume of writing I do on an everyday basis, both for pleasure and for work.

When I'm writing things like this, all alone in my room sitting on my couch, I always play some kind of music. In fact, I can barely remember the last time I went without some kind of music playing around me. The iPod is plugged into my ears practically every single moment I'm outside, and whenever I'm home I play music on my laptop speakers, or when it's late at night I use wireless headphones that plug into the speaker port of my computer (I only use Bluetooth for syncing my cellphone with my computer for some reason). Of the terabytes of data I'm sitting on, the vast majority of the space is taken up by music from all over the world, across all sorts of genres. I have Bach, Mozart, and Beethoven, all representing their own eras. I have some rock, some of it the harder variety. I also have a crazy collection of J-pop compilations and singles, many of them in the form of original CDs sitting in some storage space in the city, since it was way too impractical to bring them with me in my frequent moving binges. I regularly buy music from promising bands and composers, like the OST/inspired album for Neotokyo. It makes me look like some sort of freak in this day and age, when people my age don't seem to buy anything if it's available in digital format.

Music must be one of the most fundamental inventions of humanity. Perhaps the invention of music is the event we can clearly mark as the moment of divide between human the Homo sapiens and human the semi-ape. It's logical, yet impulsive. It's formless, yet the system that makes music come true can be observed all across the world, across the universe in the weirdest places, like the shapes of galaxies, the pulses of stars, or the patterns of moss in a forest. Music is very mathematical in that regard, and it is probably no surprise that expertise in one usually accompanies the other... There are some people who say the arts are too different from the sciences for them to coexist, but I tend to think it's only a way to cover for their own incompetence. All the greatest artists in human history have been scientists in one form or another, and this pathetic division that forces a child to choose between a path of arts and a path of sciences is a freakish accident of social nature that has nothing to do with the arts or the sciences themselves. I say this a lot these days, but really. One day, future generations will look back at the state of arts and sciences today and laugh or be horrified at how crazy and irrational it all is...

Well I think I'm through venting for now. Gotta get back to work for the day ahead.

Sunday, July 26, 2009

It's almost annoying-- And pretty pictures

I keep on writing double topic posts on this blog for some reason. I think it has something to do with how it's ridiculously difficult to concentrate on something these days, with the weather, the financial situation, and very weird family matters I shouldn't even be worrying about at this age.

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

How hard it is to blog properly these days. I mean, sure, it's easier than ever to type things up using either my notebook or the BlackBerry and publish it all right to the net, but it's just way too difficult to write a blog post with properly thought out reasoning and half-decent grammar. The problem is coherence. It's getting more difficult to write things that are coherent. Without coherent reasoning behind the writing I might as well let my Python script do the talking by linking together random words from a dictionary (now that I think about it, that might be fun. Should try it on another blog).
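
Just to show how little it would take, here's a rough sketch of that kind of random-word linker. The word list and the `babble` function are made up for illustration (a real version would probably read from an actual dictionary file), so treat it as a toy and not the script itself.

```python
import random

# Placeholder word list (an assumption for this sketch); a real run
# would more likely load words from a dictionary file.
WORDS = ["coffee", "galaxy", "violin", "protein",
         "laptop", "river", "monkey", "engine"]


def babble(words, length, seed=None):
    """Link `length` randomly chosen words into one 'sentence'."""
    rng = random.Random(seed)  # a seed makes the nonsense repeatable
    chosen = [rng.choice(words) for _ in range(length)]
    # Capitalize the first letter and end with a period so it at least
    # looks like a sentence, coherent or not.
    return " ".join(chosen).capitalize() + "."


if __name__ == "__main__":
    print(babble(WORDS, 8))
```

Each run prints eight words strung together with no reasoning behind them whatsoever, which is more or less the point.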

Because it's so difficult to write lengthy yet coherent pieces, I've missed a lot of opportunities for some good posts. New developments in technology like the growing of a whole rat from an iPS cell culture taken from another adult rat (with some issues, but that's only to be expected), or protein-induced pluripotency within cells (the actual paper I have yet to read), or even the hypothesis that the emergence of life might be hardwired into the complex system that is the universe (which is something I've suspected for a long time, but this is probably the first time it's been publicized in a popular science publication). Don't even get me started on the plethora of amazing TED talks out there that I'm just dying to share with you all.

This is one of the most annoying things about maintaining a personal blog. Am I a content creator, or am I just copy-pasting cool news of other people's accomplishments into a digital medium for further copy-pasting, like how it is with most tumblr accounts (with some notable exceptions)? I always try to write my own stuff, but then the product of such creative exercise rarely if ever looks as exciting as the discovery of quorum sensing, a new take on the complexity sciences, or new developments in synthetic biology...

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

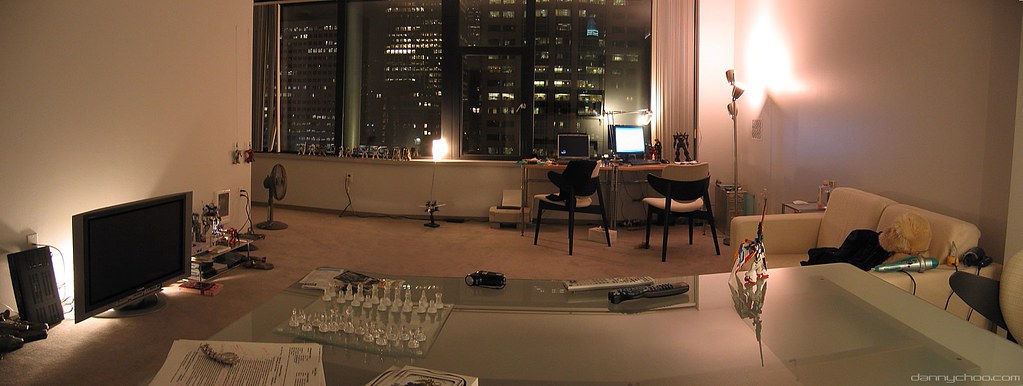

On another note, here are some interesting pictures of other people's computer workspaces. I think I should post my multiscreen setup here sometime in the future as well.

There are more at this webpage. Looking at other people's workstation setups is always fascinating for me. I guess it's a kind of technofetishism/infornography that's so common these days. I know a number of people who maintain elaborate workstation environments and give them lavish names like 'the cathedral' and such (I'd much prefer the term temple or library, but each to her own I guess). And while I don't operate anything as fancy as that except in my lab, which doesn't count since the hardware in that place doesn't really belong to me (8-core with 16GB RAM, 3 screens, wowowiwa!), I understand what they are going for. With society being built increasingly around the engines of information we call 'com-pu-ter', it's become an essential feature of any semi-viable household. All of my friends think that while it's possible to live without TV, it would be impossible to live without access to the internet and some sort of computing device. Even the non-techie ones who can't tell the difference between Java and C++. It's a shielded environment where one can fulfill both the functions necessary to life (earning a living) and the functions necessary to keep the mind alive, through education, fun, contact with other people, and just plain ol' time-wasting. Just as swordsmen take meticulous care of their swords, providing lavish casing and decoration of the highest materials for their tools of trade and mental compass, so many of us do the same for our computers.

I would love to be able to set up a beautiful workstation like that in my own house, but it is a little difficult at the moment. I move around frequently, both in terms of going around the city for jobs and moving to another place of living for whatever reason. So my main computer has been a laptop for a long time. And since I can't seem to completely give up the computer gaming side of myself (well, console gaming as well, with PS2 and NDS-lite, but I haven't really played them for... months), all of them have been light-yet-workstation class machines with dedicated graphics.

Even with mobile computing, however, I still maintain something very close to what those people do with their physical workstations. In the real world I like to keep my desk area meticulously clean. Just some spare USB cables for my netbook/ereader/BlackBerry connection, my external HD solution totalling close to 2TB of storage space, a lab notebook (paper), and my laptop. That's it. The rest is white and wood. No carpet, no dust. It's really wonderful. You'd be surprised to know how much a clean workspace contributes to productivity.

As for 'pimping out', it's usually all in the computer. Instead of buying new exotic figurines or lighting fixtures for the workspace like some other people, I stick with the software side of things. I run a custom theme that looks cool and clean, and eats through less memory than the default Vista theme. I have personal organizer software running on my sidebar as a separate application instead of the Windows-supplied sidebar, which, while nice in functionality, uses too much memory and is a possible security risk. I am also very careful about choosing my desktop background image. Being pretty isn't good enough to be chosen as my desktop background. It needs to have a certain aesthetic quality that works with the rest of the software platform. I'm currently running a 3D simulation of human brain neurons as my desktop background and it fits in with the rest of the computer and my work applications perfectly. It's like the whole thing was made with each other in mind. And the desktop's just the beginning. I also pay a significant amount of attention to my web browser, which is probably somewhere between the first and the fourth most used application on any computer I use. Choosing a web browser is a really complex, sometimes draining process. Not only should I be aware of the kind of aesthetic look inherent to a browser, I also need to consider its technical capacity and memory consumption. Since mobile computing is a big part of my life I really need to watch the memory and processor power consumption of all my applications. I can't have my machine run out of juice just when I'm about to deliver that paper I've been struggling with for three months, you see. Web browsers serve all sorts of purposes for me. It's a banking terminal. It's a programming tool. It's an entertainment machine and a terminal to a different world.

At the moment I run three web browsers on my computer: Opera 10b with the Opera Unite service activated (more on that later), Firefox 3.5 with Greasemonkey and all the necessities, and Google Chrome. I just can't seem to figure out which browser I like best, but the default browser on my computer remains Firefox for its wider compatibility. Opera 10b is something of a mixed bag. I think I can write up a few things that really need to be improved in the browser, but overall the build is very tight, with all sorts of functionality and widget availability that make this browser feel like a separate operating system independent of the Windows Vista it runs on. I'm also in love with the Opera Unite service that turns any instance of the Opera browser into a personal webserver with configurable programs/services you can download directly off the net. I can see where this service is going and I like it. Google Chrome is something of an oddball. I liked the browser so much that I briefly used it as my default browser. It's the fastest one of the bunch and you can certainly feel the speed difference compared to all the other web browsers. It's secure with the whole sandbox mechanism, perhaps even more so than other web browsers on the market. Google is working on all sorts of crazy projects to increase the functionality of the browser, and it already has a significant number of improvements built into it. Yet the interface remains minimalistic, with most of the 'gears' hidden beneath the clean shell some people think is 'too clean'. I like it. It does everything I would ever want from a web browser, and it's open source with the full might of Google standing behind it, meaning it's going places with new and innovative technologies. The problem is with the memory and processing requirements of the browser. It slows my brand new laptop to a crawl when left on for a whole day or two, which is something I usually do with my computers. Opera and Firefox so far don't seem to suffer from that problem.

Writing about all these things makes me feel like a geek, or an otaku of sorts. The vast majority of people out there definitely don't bother with theming their operating system, figuring out the perfect color sheen of the desktop wallpaper, or worrying about Acid3 test results on their browsers. I guess I am a semi-otaku of sorts. Otaku meaning a person obsessed with information, be it about the newest anime or computer technology, biotechnology or robotics. Infornography seems to be the description of how otakus treat information... More on that later.

Saturday, July 25, 2009

Wake-up call. Change the world.

Just a rough draft of something I've been thinking about a lot lately... It's good to be able to do some draft publishing before releasing things as a full version. Normally I would do this kind of thing on my handset, but why type away on the minuscule keyboard when I can write in the comfort of my own laptop, courtesy of the free wifi access points throughout the city? (Which is truly marvelous. Not that many major cities in the world offer muni-supported wifi access points. Like Japan, for example. Those people are obsessed with getting paid for letting people browse on their wifi spots.)

As usual, I'm busy with all sorts of studying and jobs to keep myself alive. If I've realized one thing about myself over the course of the years, it's that I count curiosity and the pursuit of the ever greater 'stuff' of the world to be an integral part of human existence. I probably can't live without being able to learn more things and step closer and closer to the edge of the world. Sure, food and shelter are about the only things a human being needs to survive directly, but if that were all there was to it, the ideal lifestyle would be being locked up in a municipal insane asylum, wouldn't it? Freedom of mind and body is just as integral to a living existence as immediate nourishment and protection from the elements. It sounds obvious when laid out like this, but there are surprisingly many people who think otherwise. People with power who affect the lives of other people. Goes to show how sane this world is, doesn't it?

I've been looking into more of the synthetic biology stuff, making use of the relatively ample free time available to me during the summer. I think I'm beginning to come up with a tangible idea and timeline for the impending DIY-bio artificial/synthetic cell project. I still don't know if the other members of the NYC group will approve; all I can do is work on the stuff until it's just as realistic as getting off the couch and going out for an ice cream. As long as I keep the target relatively simple, like having functional DNA snippets within an artificial vesicle, it might work with standard BioBrick parts... Just maybe.

I've been watching some old hacker movies lately. Or should I say that a friend of mine has been running a screening of sorts for the past few weeks? And I just can't believe what kind of cool things those movie hackers were able to pull off with their now-decades-old computers and laptops. Computers with interfaces and hardware that exude that old retro feel even across the projection screen. I know a lot of people with brand-spanking new computers with state-of-the-art hardware, and what they usually do, or can do, with those machines isn't as cool as the stuff being pulled off in the movies with vastly inferior hardware and network access. Of course, like everything in life, it would be insane to compare the real with the imagined, and Hollywood movies, especially the ones made during the days when computers were still new and amazing specialty gadgets, have a bad tendency to exaggerate and blow things out of proportion (I'm just waiting for that next dumb movie with synthetic biology as the culprit, though it might not happen since Hollywood's been barking about the decency of genetic engineering technology for over a decade now). Even with that in mind, I can't help but feel that the modern computerized society is just way too different from the ones imagined by artists and technologists alike during those days.

Ever heard the younger Steve Jobs talking in one of his interviews? He might have been a bastard, but he certainly believed that ubiquitous personal computing would change the world for the better. Not one of those gradual, natural changes either. He actually believed that it was going to accelerate the advancement of humanity in the universe, very much like how Kurzweil is preaching about the end of modernity with the upcoming singularity of technologies. Well, personal computing is nothing new these days. It had actually gone quite stale until a few months ago, when people finally figured out that glut-ridden software with no apparent upgrade in functionality was a bad thing, both in terms of the environment and the user experience. Ever since then they've been coming out with some interesting experiments like the Atom processor for netbooks (as well as netbooks themselves), and the Nvidia Ion system for all sorts of stuff I can't even begin to describe. And even with the deluge of personal computing and personal computing oriented changes in the world, we have yet to see the kind of dramatic, real, intense change we were promised so long ago. Yeah sure, the world's slowly getting better. It's all there when you take some time off and run the real numbers. It's getting a little bit better as time goes on, and things are definitely changing like some slow-moving river. But this isn't the future we were promised.

We have engines of information running in every household and in many people's cellphones right now. What is an 'engine of information'? It refers to all sorts of machinery that can be used to create and process information content. Not just a client-side consumption device where the user forks money over to some company to get little pieces of pixels or whatever, but real engines of information that are capable of creating as well as consuming. It's as if this were the Victorian Era and everyone had a steam engine built into everything they could think of. Yet still nada. Nothing. Zip. The world's rolling at the same pace as before and most people still think in the same narrow-minded little niches of their own. What's going on here? Never in history has such a huge number of the 'engines' behind the expansion of humanity been available to so many people at once. And that's not all. Truly ubiquitous computing made available by advances in information technology is almost here, and it is very likely that it will soon spread to the poorer parts of the world in similar fashion as it has in the large cities of the G8 nations.

But yet again, no change. No dice. Again, what's happening here, and what's wrong with this picture? Why aren't we changing the world using computers at a vastly accelerated rate, like how we changed the world with rapid industrialization? That's right. Even compared to the industrialization of old, with its relatively limited availability and utility of steam engines, we are falling behind on the pace of change. No matter what angle you take, there is something wrong in our world. Something isn't quite working right.

So I began to think during the hacker movie screening and by the time the movie finished I was faced with one possible answer to the question of how we'll change the world using engines of information. How to take back the future from spambots, 'social media gurus', and unlimited porn.

The answer is science. The only way to utilize the engines of information to change the world in its tangible form is science. We need to find a way to bring the sciences to the masses. We need to make them do it, participate in it, and maybe even learn it, as outlandish as the notion might sound to some people out there. We need to remodel the whole thing from the ground up, and change what people automatically think of when they hear the term science. And tools. We need the tools for the engine of information. We need some software-based tools so that people can do science everywhere there is a computer, and do it better everywhere there is a computer and an internet connection. And we need to make it so that all of those applications/services can run on a netbook-spec'd computer. That's right. Unless you're doing serious 3D modeling or serious number-crunching you should be able to do scientific stuff on a netbook. Operating systems and applications that need 2GB of RAM to display a cool visual effect for scrolling text-based documents are the blight of the world. One day we will look back at those practices and gasp in horror at how far they held the world back from the future.

As for actual scientific applications, that's where I have problems. I know there is already a plethora of services and applications out there catering to openness and science integrated with the web. OpenWetWare and other synthetic biology associated computer applications and services (like BioBrick Studio, www.openwetware.org/Notebook/BioBrick_Studio.html) come to mind. Synthetic biology is a discipline fundamentally tied to the use of computers, access to outside repositories and communities, and a large amateur community for beta testing its biological programming languages, so it makes sense that it's one of the foremost fields of science that are open to the public and offer a number of very compelling design packages for working with real biological systems. But we can do more. We can set up international computing support for amateur rocketry and satellite management, using low-cost platforms like the CubeSat. I saw the launch of a private rocket into Earth's orbit through a webcam embedded in the rocket itself. I actually saw space from the point of view of the rocket, sitting in my bedroom with my laptop, as it left the coils of the Earth and floated into space with its payload. And this is nothing new. All of this is perfectly trivial, and is of such technical ease that it can be done by a private company instead of national governments. And all the peripheral management for such operations can be done on a netbook given a sufficient degree of software engineering. There are other scientific applications that I could rattle on and on about without pause.... So why isn't this happening? Why aren't we doing this? Why are we forcing people to live in an imaginary jail cell where the next big thing consists of scantily clad men/women showing off their multi-million dollar homes with no aesthetic value or ingenuity whatsoever? Am I the only one who thinks the outlook of the world increasingly resembles some massive crime against humanity? It's a crime to lock up a child in a basement and force him/her to watch crap on T.V., but when we do that to all of humanity suddenly it's A-OK?

We have possibilities and opportunities just lying around for the next ambitious hacker-otaku to come along. But they will simply remain possibilities unless people get to work on them. We need software and people who write software. We need academics willing to delve into the mysterious labyrinths of the sciences and regurgitate them in a user-friendly format for the masses to consume, with enough nutrients in it that interested people can actually do something with it.

This should be a wake-up call to the tinkerers and hackers everywhere. Stop fighting over which programming language is better than what. Stop trying to break into facebook accounts of whoever the snotty-nosed brat is. Get off your fat sarcastic asses and smell the coffee.

Get to work.

Change the world.

Tuesday, July 14, 2009

VirtualBox and other things

These days I see less and less utility in full sized laptops as a media tool, since it is technically possible to do most basic multimedia and creative work on a well-equipped mobile. I've been watching quite a lot of YouTube videos on my BlackBerry, which is surprisingly usable despite its limited memory and processing power. This is with a mobile that's already outdated and in the process of being phased out, so the experience is only better on the higher end handsets coming out these days... It's a little weird to have access to all the online research papers, video journals and dubbed anime (subtitles will turn me blind on this screen), not to mention IRC and instant messaging clients, in the palm of my hand. The ubiquity of information is addictive when you're sufficiently exposed to it, and that is a lesson the mobile/connectivity corporations would do well to remember. (That makes me think: the mobile market trend today might have turned out a lot differently if some innovative company could be in charge of both communications infrastructure and handset design, or even handset design without having to worry about the politics of communications infrastructure.)

I played around a bit with VirtualBox this afternoon. Usually I run VNC within Windows to access alternative platforms, but I thought it would be interesting to be able to run a minimalistic Linux/UNIX OS within a Windows installation. I used #! Linux, and the whole process took about 5 minutes. It's remarkable how simple it is compared to only a year or so ago. VirtualBox still has a bit of an issue with getting the graphics driver to display resolutions higher than 800x600, so I had to do some manual tweaking that involved hosing one system installation, reverting to a previous session, and reinstalling the Guest Additions/editing xorg.conf, but compared to some other installations it was all a minor hurdle. ...

More stuff on the state of minimal cell research.

Sent via BlackBerry by AT&T

Monday, July 13, 2009

It's funny, and somewhat unfortunate, that I keep choosing two different topics to cover in a single day's post. Perhaps this type of indecision is the reason behind the stagnant state of my WordPress blog, which tends to demand a bit more quality and coherent thought than my LiveJournal?

I've been watching some Hunter S. Thompson biography material on the net for the last few days, including a biography TV show on Hulu. An interesting person who gave birth to the style of writing we now refer to as gonzo... Basically a subjective, free-form exercise in journalism unrestrained by traditional format. Some might cringe at subjective journalism, but then what journalism is truly objective? When you get right down to it the difference is in the language used, and gonzo-style journalism never makes any pretense of objectivity. The technique of giving the reader a first-person account of the experience in question was revolutionary in its time, and it permeates all sorts of different media today, starting with the faux-reporting seen in Warren Ellis' Transmetropolitan series, where the whole of the comic was more or less written through the eyes of Spider Jerusalem, who was probably modeled after Hunter S. Thompson.

Compared to all the psychos and sickos out there, Thompson certainly maintained a certain method to his madness until the very end. It would have been really interesting to see what kind of things such a character could do given the technological tools of the future/transhumanism. Maybe he would have ended up blowing his head off all the same due to psychological burdens?

I've had a rekindled interest in Lovecraftian writing recently, mostly due to my little toy project of making a Python-based program that churns out random, endless stories drawn from expressions in its database. I call it the Monkeyshaker 1000, from an acquaintance's suggestion that 1000 monkeys typing randomly on a typewriter might really end up producing a Shakespeare. I've been thinking of all sorts of different things for the program to draw upon and create, and the answer is one of these two.

1) Scientific literature that draws on official (meaning verified, unlike the heap of steaming #$%! we call Wikipedia) databases on the net to produce comprehensive reports on rather meaningless, machine-dictated topics.

2) A creator of cheap knockoff novellas, the kind of stories people commonly refer to as dime store novels. Such generic novels for entertainment (paperbashing?) usually follow such a vapid structure and vocabulary that I don't see much difficulty in making a program churn out (albeit rather peculiar) pieces of short writing.

I think I'm going with number two. It is decidedly much easier than the first approach, and I already have a cool database to draw upon: the license-free works of Lovecraft. I just wonder what kind of peculiar roman the program will be able to come up with using a database full of antediluvian references. Maybe I can bill the resulting piece as gonzo journalism in a Lovecraftian universe, written by some haywire android.
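To make the idea concrete, here is a minimal sketch of how a generator of this kind could start out. It is not the actual Monkeyshaker code: the function names, the sentence-splitting rule and the three placeholder sentences are all assumptions made up for illustration, and a real run would load a proper text file in place of SAMPLE_TEXT.

```python
import random
import re
from itertools import islice

# Placeholder corpus (made-up sentences, NOT real Lovecraft text).
# A real "database" would be loaded from a file of source material.
SAMPLE_TEXT = (
    "The stars were wrong. "
    "The house stood silent on the hill. "
    "Something stirred beneath the cellar stones."
)


def split_expressions(text):
    """Split raw text into sentence-like expressions (hypothetical helper)."""
    # Break after '.', '!' or '?' when followed by whitespace.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def endless_story(expressions, seed=None):
    """Yield randomly chosen expressions forever (hypothetical helper).

    Pass a seed to get a repeatable sequence.
    """
    if not expressions:
        # Raised on the first next() call, since this is a generator.
        raise ValueError("the expression database is empty")
    rng = random.Random(seed)
    while True:
        yield rng.choice(expressions)


if __name__ == "__main__":
    database = split_expressions(SAMPLE_TEXT)
    # The story never ends on its own, so take only the first five lines.
    for line in islice(endless_story(database), 5):
        print(line)
```

Picking whole sentences at random is about the crudest approach possible. Something like a word-level Markov chain would probably give stranger and more story-like output, at the cost of a bit more code.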

On a lighter note, there's a science competition going on over at the spacegeneration aimed at anyone under the age of 33. You are supposed to come up with a novel method for stopping a possible asteroid strike on the Earth using currently available technology or the kind of technology that can reasonably be developed in the future. Novel meaning ingenious. Not another crappy knockoff of 'building a superweapon' or 'nuke it' or 'shoot a linear cannon nuke' that every other one billion and one people have proposed already, with detailed drawings and technical requirements. Something really new and scientifically feasible. The contest is obviously aimed at students just starting off their interest in space engineering and astronomical sciences, so they might be willing to overlook some of the more incredible ideas, but they are still looking for something worth presenting at the science congress they are having in Korea later this year.

Maybe I should stop by Korea in autumn, see how the whole event goes. Sounds interesting.

Sunday, July 12, 2009

Wireless and junk DNAs

It's very weird how putting pieces of my day-to-day life together in a title makes it sound like some whacked-out story reminiscent of Haruki and his line of chic-absurdist fiction.

I'm glad to say that after some hassle I got the wireless at my new apartment working at last. The rental contract actually comes with free broadband internet access, which I guess isn't too unusual in this day and age. But the thing is, the cable modem used by my apartment is a relic from when dial-up was still king and people flocked to America Online. The initial attempt at connecting the cable modem to a wireless router ended with the cable modem sending out a corrupted packet so arcane that it instantly screwed up the wireless card on my new-ish ThinkPad (the roommate's MacBook and my Linux laptop were fine. Ugh, Vista, why do you suck so much?) to the point that I had to spend the next week figuring out how to get it to work. In the end I fixed it by deleting the device from the system panel and reinstalling it, which was something of a gamble, since according to Google it only fixed anything half the time, with no one knowing the actual reason behind the malfunction.

After my laptop got back into networking-ready shape I stuck with an ethernet cable connection at home for a while, since I didn't want to risk frying my wifi card again on the poisonous packets sent out by the antediluvian cable modem. I strongly suspected some sort of Lovecraftian mystery filled with murder and hideous secrets behind the unassuming block of grey plastic, and was content for a while to live like someone from the mid-90s.

Alas, a life chained down by an ethernet cable in my own home grew too vapid for me. For someone who needs good access to a computer almost 24/7 to pay the bills, couch/bed/front porch computing is of huge importance. If I'm going to be stuck in front of the screen, at least let me choose the location (as a side note, I frequently work in the park when I'm out; the sunlight and fresh air around me while I'm working do wonders for productivity). So I decided to do some real research on how to get the modem to work nicely with my wireless router.

Well, it turns out the problem was the age of the router itself. It was made so long ago, back when wireless access to the net was a precious opportunity for the rich and the cutting edge, that it's not properly shielded from the electromagnetic fields of other appliances within one to three feet of it. From there on the solution was simple: use the ridiculously long ethernet cable I'd been using for my laptop and place the modem and the router at opposite ends of the room. Funnily enough it worked, and I'm writing this from my couch. I don't know whether to be happy or infuriated by the hurdles I had to go through to get something as simple as an encrypted wireless network running.

On another note, I've been following the Dresden Codak webcomic since its inception. I even made an ID on the forums, though I've only posted there a few times at best. Here's the newest comic at the site. There's something about the combination of the fantastic and the scientific in those webcomics that I find very charming. Yet unlike some other webcomics, Dresden Codak still retains a sharp outlook on reality that makes me wonder if the author is really drawing the future.

I really like the main character. There's something about her that's very appealing to me on some basic level I can't quite explain. Maybe it's because she's an eccentric mad scientist. And as I have stated numerous times before, everyone at heart secretly longs to become a mad scientist. I want to be able to stable some portions of my DNA on the fly as well, provided that I have a much better understanding of its mechanisms and quirks than is available to academia at the moment. I also want to ponder the questions of the universe and work at solving them, or at least understanding them, instead of playing second fiddle to the real researchers on the cutting edge of humanity's learning (and someday I might be able to achieve that, if I play my cards right).